Fresh Thoughts #72: Are We Under Attack From Voice AI Scams?


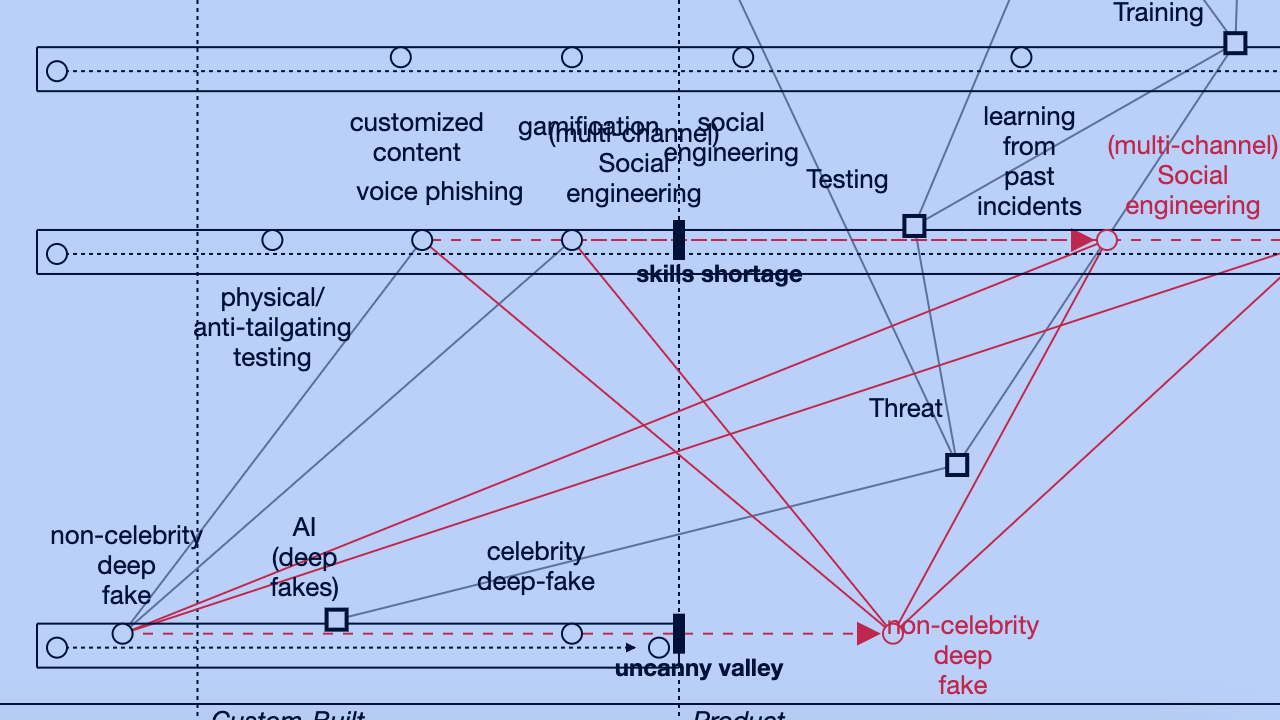

Using Wardley Maps to Understand Voice Deep Fakes

Wardley Mapping has been a massive part of my career.

It even prompted me to self-fund an 18-hour journey to spend a weekend in Portland, Oregon, and attend a half-day workshop on mapping - but that's another story.

Simon Wardley created Wardley Mapping, which - at a high level - plots a value chain of all the products, ideas, and practices (the components) needed to deliver something valuable to a customer against how "evolved" each component is.

There are many more layers of nuance in Wardley Mapping, including strategic gameplay, exploiting resistance to change and team structure. Simon's book is a great place to start and a worthy rabbit hole to get lost in.

Mapping Security Awareness

In early May 2023, I joined 60 people to map various aspects of cybersecurity as part of Simon's latest research project.

Because I'm an experienced mapper, Simon asked me to lead one of the breakout groups mapping security awareness. I wasn't overly enthusiastic about the request, as I thought security awareness was a solved problem. But the group needed a coach and guide to help get their ideas on paper.

What emerged was startling, and it reinforced that the most significant benefit of mapping is the conversations that result from trying to lay out a value chain.

One of the most exciting conversations in the project was about voice-based social engineering. Currently, this requires skilled humans who charge premium prices, making it an expensive and unscalable proposition.

"But what about deep fakes? In India, we saw politicians have used deep fakes to impact elections..."

And so began a fascinating conversation about generative AI and deep fakes.

How easy is it to create a deep fake?

We've seen ChatGPT's generative AI write text in the style of famous authors...

And Midjourney's generative AI create images in the style of famous painters...

So creating text and image deep fakes with generative AI seems pretty mature. But what about sounding like someone?

Celebrity Deep Fakes

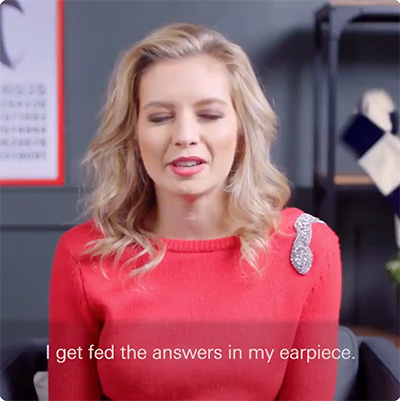

The first almost-convincing voice and video deep fake I remember seeing was HSBC's hilarious advert of Rachel Riley confessing to being bad at maths.

But that was over five years ago.

More recently, Jolly's "I used AI to clone my Best Friend's voice and released an Audiobook" provided a light-hearted look at the art of the possible.

And Corridor Crew's "I Stole my Friend's Voice With AI" covered the mechanics of impersonating a team member's voice.

But all of these have one thing in common...

They all had lots of audio available to train the AI model.

So what about when the deep fake isn't a celebrity?

What about when it's a CEO or managing director, and there isn't much voice training data?

Deep Fakes of You and Me

I posed the question in the group chat and, within 2 minutes, received a link to "Microsoft's new AI can simulate anyone's voice with 3 seconds of audio".

Game over?

Well... not quite.

Finding and reading the actual paper being cited took several more hours. And, as ever, the devil is in the detail.

The research mimicked a single, short utterance using an AI-generated voice. And while the results were better than previous methods, a gap remains between the generated audio and the actual person's voice, in both similarity to the speaker and naturalness of speech.

This work is impressive, but to be clear: we aren't at the point of convincing deep fakes of you and me.

Our deep fakes aren't as good as celebrity deep fakes... yet.

As a group, we predicted that convincing non-celebrity deep fakes will happen, but that it will take time. We don't know how long, which is why it is vital to look for indicators, on both the defensive and adversarial sides, that the next step in voice-based deep fake development is taking place.

But no sooner had I started looking for indicators than I began to see a very different narrative playing out in the media.

Next week, in Part 2, I'll cover how a single assertion from a concerned friend resulted in US Congressional testimony and headlines stating unsubstantiated claims like "AI clones child's voice in kidnapping scam".