Fresh Thoughts #73: Deep Fakes - The End of the World is Nigh...

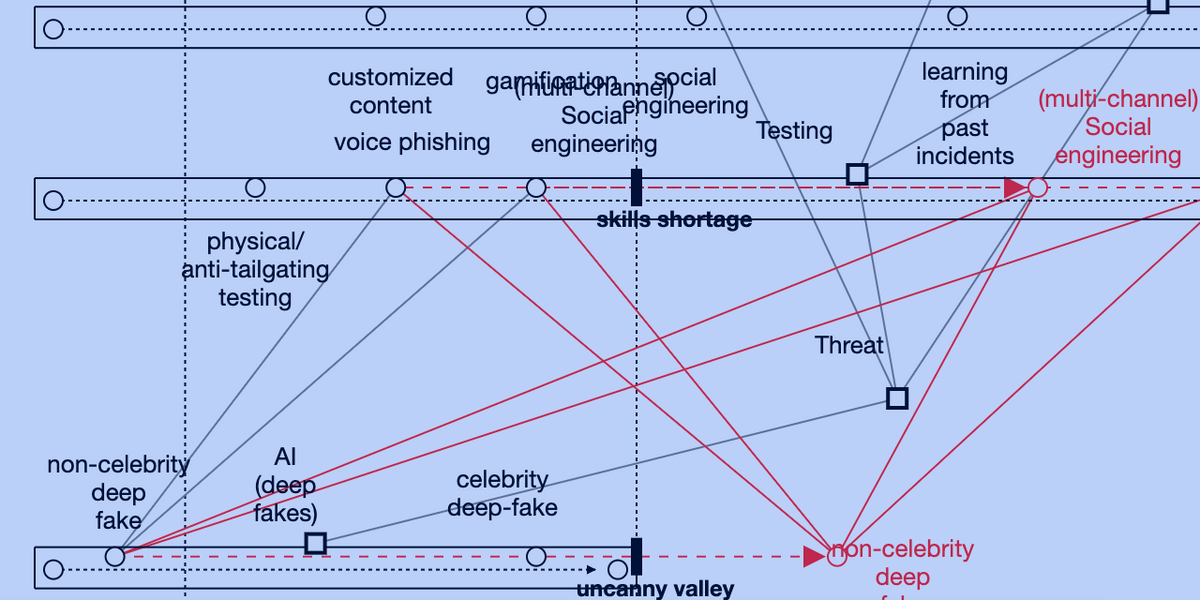

Last week I wrote about using Wardley Maps to understand voice-based deep fakes.

Are they a threat right now?

I concluded: No - not right now for everyday folk...

But you may not believe that from what you read in the media.

Deep Fakes - The End of the World is Nigh...

"When recruits came into the section - they always had the same first task.

What's the latest sordid gossip going around the base?

[..]

You have 24 hours to find out what actually happened.

Go!"

That was one of many stories an old Navy intelligence officer told me at the start of my career. It's a brilliant story - and exercise - as it encapsulates the very essence of what it means to collect intelligence.

The information doesn't have to be provable in court - so hearsay is acceptable. But also, if you only hear the story from one source or it is third-hand - it is likely worthless.

I was reminded of this story shortly after I started looking for signs of voice-based AI deep fakes.

In a matter of days, I started seeing information signals in this area - likely because I was more aware of them. But also because of the current climate of fear around what AI and deep fakes could mean.

Source 1: Voice Scams Are Everywhere

The day after concluding the Wardley Mapping session - McAfee published a report on voice-based AI scams - using the same paper we'd reviewed. Their survey stated that 24% of adults had personally experienced an AI-voice scam or knew someone who had.

I am sceptical based on the report's loose language, broad definitions, and vagueness.

AI-voice scams will almost certainly become this common - but are they right now?

I'm not convinced - it sounds like selling security products on the back of fear, uncertainty and doubt.

Source 2: The Person on the TV Said It...

A few days later, I saw:

Martin Lewis - a well-publicised personal finance expert in the UK - "said he is now hearing of an extremely sophisticated new type of scam emerging involving the use of AI". In the following sentence, he states, "They are using AI to manipulate their voice [..] the scammer will talk to them in their child's voice."

Erm... there's a switch in language there.

It starts as hearsay...

But it's being reported as fact by the end... 🤔 🤨.

Where is this coming from?

Source 3: Arizona - Getting Closer to the Origin

A few days after Martin's comments, I read about the now widely reported story of a mum in Arizona who was tricked into believing that her daughter had been kidnapped. The headline read: "US mother gets call from 'kidnapped daughter' - but it's really an AI scam".

How do we know that this was AI-based?

"Another parent with her informed her police were aware of AI scams like these."

That's it.

The initial reports and subsequent congressional testimony cover an undoubtedly alarming and fear-inducing situation. And that's the point.

There is a chance that criminals have more advanced AI capabilities than Microsoft has published. But this sounds like a new development in the notorious 'Hi Mum and Dad' scam found on WhatsApp - something my sister was warned about while living in Mexico City in 2004.

Put simply, fear leads to cognitive dissonance, which in turn enables forced compliance - people saying and doing things that are against their better judgement.

This is what scammers prey upon.

Source 4: The London Evening Standard

And so, when it came time to report the mother's congressional testimony, the London Evening Standard managed to turn the now firmer (but still vague) statement of "[I was told] 911 is very familiar with an AI scam where they can use someone's voice" into the fact-asserting headline: "AI clones child's voice in kidnapping scam".

Source 5: What the FBI Actually Said...

And so, finally, there's one loose thread to tidy up. These stories are rooted in the belief that police are aware of AI scams. So what has law enforcement said about this?

I can't find any original information published by Arizona law enforcement, but the FBI did publish a Private Industry Notification on 'synthetic content' (deep fakes) in March 2021.

Which predicted:

"Malicious actors almost certainly will leverage synthetic content for cyber and foreign influence operations in the next 12-18 months. [..] the FBI anticipates it will be increasingly used by foreign and cyber actors for spearphishing and social engineering."

The evidence cited in the notification comes from Russian and Chinese state-sponsored actors, and it includes specific guidance on how to spot manipulated images - but no mention of voice-based deep fakes.

A more recent Public Service Announcement from the FBI has continued this trend, highlighting a rise in the manipulation of images and videos to create explicit content for extortion attempts.

Again, there is no reference to voice-based deep fakes.

But for my Wardley Mapping sessions, the research conversations, the detailed study in this area, and the careful reading of media articles - I might have believed we were already under attack from voice-based AI, and that it's only a matter of weeks before each of us receives a ransom demand in a loved one's voice...

We will almost certainly arrive at this place...

But as the group suspected and the FBI reinforced - that time is not now.